PowerShell is a compelling tool for attackers (and pentesters) because code can run entirely in memory, with no need to touch disk (unlike executables, batch files, and VBScripts). Projects like PowerSploit and POSHSec show how capable PowerShell already is as an attack platform.

PowerShell Magazine has a great article on Investigating PowerShell Attacks:

Prior articles by Matthew Graeber, Joseph Bialek, and Will Schroeder did a great job of explaining why PowerShell is so dangerous in the hands of an attacker – particularly given elevated privileges during the post-exploitation phase of an incident. It provides:

- A built-in mechanism for remote command execution

- The ability to execute malicious code without ever touching disk

- The ability to evade many Anti-Virus and Intrusion Prevention Systems

- Full access to WMI and .NET Framework

The unauthorized use of PowerShell presents several challenges to forensic analysts and system administrators alike:

- As a legitimate component of Windows, PowerShell execution does not necessarily indicate malicious behavior.

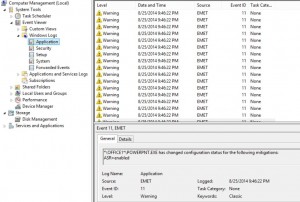

- PowerShell 2.0 does not provide a practical mechanism for detailed (e.g. per-command) auditing. PowerShell 3.0 and later provide comprehensive module logging, but are installed by default only on Windows 8 and Server 2012, which remain uncommon in many corporate environments.

- PowerShell remoting sessions occur in ephemeral process memory with few-to-no persistent artifacts.

- Many system administrators and security professionals remain unfamiliar with PowerShell and its management or security controls.

Faced with these mounting challenges, we decided to research the forensic “footprints” left behind by the ways that an attacker might use PowerShell – a topic for which publicized information is scarce. Our work focused on three fundamental scenarios: local PowerShell execution, PowerShell remoting, and the configuration of a persistent PowerShell backdoor through the use of WMI. We examined multiple sources of evidence, including the registry, file system, event logs, process memory, and network traffic.
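As a small illustration of what examining local PowerShell execution can involve, here is a minimal Python sketch that recovers the script text from a recorded command line that used PowerShell's `-EncodedCommand` parameter (Base64 over UTF-16LE text). It is not taken from the article: the function name `decode_encoded_command` and the whitespace-based tokenizing are assumptions made for this example, and a real command line may need more careful parsing.

```python
import base64

# Full name of the powershell.exe parameter; PowerShell also accepts
# unambiguous prefixes of it (for example -enc or -e) and the alias -ec.
_PARAM = "encodedcommand"


def decode_encoded_command(command_line):
    """Return the decoded script from a PowerShell command line, or None.

    Hypothetical helper for illustration: splits on whitespace, looks for
    an -EncodedCommand style switch, and decodes the token that follows.
    """
    tokens = command_line.split()
    for i, token in enumerate(tokens[:-1]):
        if token[0] not in "-/":
            continue
        name = token[1:].lower()
        if not name or not (_PARAM.startswith(name) or name == "ec"):
            continue
        payload = tokens[i + 1].strip("\"'")
        try:
            # -EncodedCommand expects Base64 of a UTF-16LE string.
            raw = base64.b64decode(payload, validate=True)
            return raw.decode("utf-16-le")
        except ValueError:
            # Covers malformed Base64 and invalid UTF-16 data.
            return None
    return None
```

Passing a command line captured from process memory or a log entry to `decode_encoded_command` returns the script text if an encoded command is present, and `None` otherwise. Finding an encoded command is not proof of malicious use, since legitimate tooling uses the parameter too.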

Read the rest of the article, Investigating PowerShell Attacks.
